According to Google Scholar, David Blei’s first topic modeling paper has received 3,540 citations since 2003. Everybody’s talking about topic models. Seriously, I’m afraid of visiting my parents this Hanukkah and hearing them ask “Scott… what’s this topic modeling I keep hearing all about?” They’re powerful, widely applicable, easy to use, and difficult to understand — a dangerous combination.

Since shortly after Blei’s first publication, researchers have been looking into the interplay between networks and topic models. This post will be about that interplay, looking at how they’ve been combined, what sorts of research those combinations can drive, and a few pitfalls to watch out for. I’ll bracket the elephant in the room until a later discussion: whether these sorts of models capture the semantic meaning for which they’re often used. This post also attempts to introduce topic modeling to those not yet fully aware of its potential.

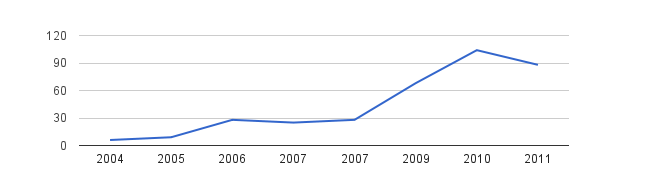

Citations to Blei (2003) from ISI Web of Science. There are even two citations already from 2012; where can I get my time machine?

A brief history of topic modeling

In my recent post on IU’s awesome alchemy project, I briefly mentioned Latent Semantic Analysis (LSA) and Latent Dirichlet Allocation (LDA) during the discussion of topic models. They’re intimately related, though LSA has been around for quite a bit longer. Without getting into too much technical detail, we should start with a brief history of LSA/LDA.

The story starts, more or less, with a tf-idf matrix. Basically, tf-idf ranks words based on how important they are to a document within a larger corpus. Let’s say we want a list of the most important words for each article in an encyclopedia.

Our first pass is obvious. For each article, just attach a list of words sorted by how frequently they’re used. The problem with this is immediately obvious to anyone who has looked at word frequencies; the top words in the entry on the History of Computing would be “the,” “and,” “is,” and so forth, rather than “turing,” “computer,” “machines,” etc. The problem is solved by tf-idf, which scores the words based on how special they are to a particular document within the larger corpus. Turing is rarely used elsewhere, but used exceptionally frequently in our computer history article, so it bubbles up to the top.
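To make the scoring concrete, here is a minimal sketch of one common tf-idf variant (raw term frequency times the log of inverse document frequency). The three-sentence corpus and the function name `tf_idf` are made up for illustration; real tools differ in their exact weighting and smoothing.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {word: score} dict per document.

    docs is a list of token lists. The score is the word's share of the
    document times log(number of docs / docs containing the word).
    """
    n_docs = len(docs)
    # Document frequency: in how many documents does each word appear?
    doc_freq = Counter()
    for tokens in docs:
        doc_freq.update(set(tokens))
    scores = []
    for tokens in docs:
        counts = Counter(tokens)
        scores.append({
            word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return scores

# Toy corpus, invented for this example.
corpus = [
    "the turing machine is the model of the computer".split(),
    "the cow is in the field and the cow is happy".split(),
    "the play is by shakespeare and the play is long".split(),
]
scores = tf_idf(corpus)
top_words = sorted(scores[0], key=scores[0].get, reverse=True)[:3]
print(top_words)
```

Because “the” and “is” appear in every document, their inverse document frequency is log(1) = 0, so they drop to the bottom no matter how often they are used.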

LSA and pLSA

LSA utilizes these tf-idf scores [1] within a larger term-document matrix. Every word in the corpus is a different row in the matrix, each document has its own column, and the tf-idf score lies at the intersection of every document and word. Our computing history document will probably have a lot of zeroes next to words like “cow,” “shakespeare,” and “saucer,” and high marks next to words like “computation,” “artificial,” and “digital.” This is called a sparse matrix because it’s mostly filled with zeroes; most documents use very few words relative to the entire corpus.

With this matrix, LSA uses singular value decomposition to figure out how each word is related to every other word. Basically, the more often words are used together within a document, the more related they are to one another. [2] It’s worth noting that a “document” is defined somewhat flexibly. For example, we can call every paragraph in a book its own “document,” and run LSA over the individual paragraphs.
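A rough sketch of that idea, assuming NumPy is available: build a tiny term-document matrix, reduce it with SVD, and compare the resulting word vectors by cosine similarity. The words, the scores in the matrix, and the choice of `k = 2` are all invented for illustration, and real LSA pipelines differ in weighting and in how many dimensions they keep.

```python
import numpy as np

# Toy term-document matrix (rows = words, columns = documents).
# The values stand in for tf-idf scores and are made up.
words = ["computer", "digital", "turing", "cow", "milk"]
matrix = np.array([
    [2.0, 1.0, 0.0, 0.0],   # computer
    [1.0, 2.0, 0.0, 0.0],   # digital
    [1.0, 1.0, 0.0, 0.0],   # turing
    [0.0, 0.0, 2.0, 1.0],   # cow
    [0.0, 0.0, 1.0, 2.0],   # milk
])

# Singular value decomposition, keeping only the k largest singular values.
k = 2
u, s, vt = np.linalg.svd(matrix, full_matrices=False)
word_vectors = u[:, :k] * s[:k]   # one k-dimensional vector per word

def relatedness(a, b):
    """Cosine similarity between the reduced vectors of two words."""
    va = word_vectors[words.index(a)]
    vb = word_vectors[words.index(b)]
    return float(va @ vb / (np.linalg.norm(va) * np.linalg.norm(vb)))

print(relatedness("computer", "digital"))  # words that share documents
print(relatedness("computer", "cow"))      # words that never co-occur
```

In this toy matrix the computing words and the farm words never share a document, so the first score comes out close to 1 and the second close to 0.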

To get an idea of the sort of fantastic outputs you can get with LSA, do check out the implementation over at The Chymistry of Isaac Newton.

The method was significantly improved by Puzicha and Hofmann (1999), who did away with the linear algebra approach of LSA in favor of a more statistically sound probabilistic model, called probabilistic latent semantic analysis (pLSA). Now is the part of the blog post where I start getting hand-wavy, because explaining the math is more trouble than I care to take on in this introduction.

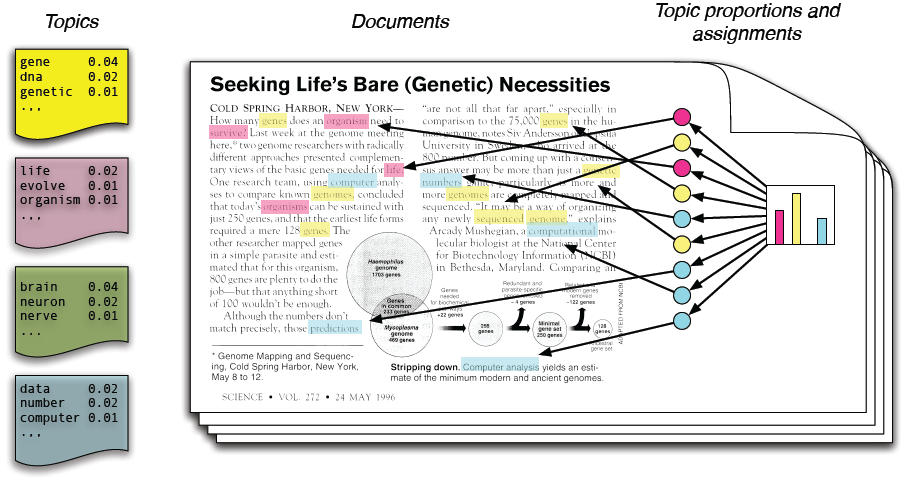

Essentially, pLSA imagines an additional layer between words and documents: topics. What if every document isn’t just a set of words, but a set of topics? In this model, our encyclopedia article about computing history might be drawn from several topics. It primarily draws from the big platonic computing topic in the sky, but it also draws from the topics of history, cryptography, lambda calculus, and all sorts of other topics to a greater or lesser degree.

Now, these topics don’t actually exist anywhere. Nobody sat down with the encyclopedia, read every entry, and decided to come up with the 200 topics from which every article draws. pLSA infers topics based on what will hereafter be referred to as black magic. Using the dark arts, pLSA “discovers” a bunch of topics, attaches them to a list of words, and classifies the documents based on those topics.

LDA

Blei et al. (2003) vastly improved upon this idea by turning it into a generative model of documents, calling the model Latent Dirichlet allocation (LDA). By this time, as well, some sounder assumptions were being made about the distribution of words and document length — but we won’t get into that. What’s important here is the generative model.

Imagine you wanted to write a new encyclopedia entry, let’s say about digital humanities. Well, we now know there are three elements that make up that process, right? Words, topics, and documents. Using these elements, how would you go about writing this new article on digital humanities?

First off, let’s figure out what topics our article will consist of. It probably draws heavily from topics about history, digitization, text analysis, and so forth. It also probably draws more weakly from a slew of other topics, concerning interdisciplinarity, the academy, and all sorts of other subjects. Let’s go a bit further and assign weights to these topics; 22% of the document will be about digitization, 19% about history, 5% about the academy, and so on. Okay, the first step is done!

Now it’s time to pull out the topics and start writing. It’s an easy process; each topic is a bag filled with words. Lots of words. All sorts of words. Let’s look in the “digitization” topic bag. It includes words like “israel” and “cheese” and “favoritism,” but they only appear once or twice, and mostly by accident. More importantly, the bag also contains 157 appearances of the word “TEI,” 210 of “OCR,” and 73 of “scanner.”

So here you are, you’ve dragged out your digitization bag and your history bag and your academy bag and all sorts of other bags as well. You start writing the digital humanities article by reaching into the digitization bag (remember, you’re going to reach into that bag for 22% of your words), and you pull out “OCR.” You put it on the page. You then reach for the academy bag and reach for a word in there (it happens to be “teaching,”) and you throw that on the page as well. Keep doing that. By the end, you’ve got a document that’s all about the digital humanities. It’s beautiful. Send it in for publication.
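The bag-drawing story above can be sketched in a few lines. This is only the simplified version told here: the bags and their contents are hypothetical apart from the three “digitization” counts mentioned earlier, and full LDA additionally draws the topic weights and the bags themselves from Dirichlet distributions, which this sketch skips.

```python
import random

# Hypothetical topic "bags": word -> number of appearances in the bag.
topic_bags = {
    "digitization": {"OCR": 210, "TEI": 157, "scanner": 73, "cheese": 1},
    "history": {"archive": 120, "century": 90, "war": 60},
    "academy": {"teaching": 100, "tenure": 40, "department": 30},
}
# Topic weights for the new article. Only three topics are listed, so
# these are treated as relative weights rather than exact percentages.
topic_weights = {"digitization": 0.22, "history": 0.19, "academy": 0.05}

def generate_document(n_words, weights, bags, rng):
    """Write a document by repeatedly picking a bag, then a word from it."""
    topics = list(weights)
    document = []
    for _ in range(n_words):
        # Reach for a bag in proportion to the topic weights.
        topic = rng.choices(topics, weights=[weights[t] for t in topics])[0]
        bag = bags[topic]
        # Pull a word out of the bag in proportion to its count.
        word = rng.choices(list(bag), weights=list(bag.values()))[0]
        document.append(word)
    return document

rng = random.Random(0)  # fixed seed so the run is repeatable
doc = generate_document(20, topic_weights, topic_bags, rng)
print(" ".join(doc))
```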

Alright, what now?

So why is the generative nature of the model so important? One of the key reasons is the ability to work backwards. If I can generate an (admittedly nonsensical) document using this model, I can also reverse the process and infer, given any new document and a topic model I’ve already generated, what the topics are that the new document draws from.
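As a hedged illustration of working backwards, the sketch below holds a set of already-fitted topics fixed and estimates a new document’s topic weights with a simple expectation-maximization loop. The topics, their word probabilities, and the function name `infer_topic_weights` are hypothetical, and actual LDA software typically uses Gibbs sampling or variational inference rather than this stripped-down procedure.

```python
# Hypothetical fitted topics: word -> probability within the topic.
topic_word_probs = {
    "digitization": {"OCR": 0.5, "TEI": 0.4, "archive": 0.1},
    "history": {"archive": 0.6, "century": 0.3, "OCR": 0.1},
}

def infer_topic_weights(document, topics, n_iter=50, floor=1e-9):
    """Estimate how heavily a document draws on each fixed topic."""
    names = list(topics)
    weights = {t: 1.0 / len(names) for t in names}  # start out uniform
    for _ in range(n_iter):
        totals = {t: 0.0 for t in names}
        for word in document:
            # E-step: how likely is it that each topic produced this word?
            joint = {t: weights[t] * topics[t].get(word, floor) for t in names}
            norm = sum(joint.values())
            for t in names:
                totals[t] += joint[t] / norm
        # M-step: a topic's weight is its share of the words it explains.
        weights = {t: totals[t] / len(document) for t in names}
    return weights

new_document = ["OCR", "TEI", "OCR", "archive", "TEI"]
inferred = infer_topic_weights(new_document, topic_word_probs)
print(inferred)
```

The new document is mostly “OCR” and “TEI,” so most of its weight should land on the digitization topic, with a smaller share left to history for the word “archive.”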

Another factor contributing to the success of LDA is the ability to extend the model. In this case, we assume there are only documents, topics, and words, but we could also make a model that assumes authors who like particular topics, or assumes that certain documents are influenced by previous documents, or that topics change over time. The possibilities are endless, as evidenced by the absurd number of topic modeling variations that have appeared in the past decade. David Mimno has compiled a wonderful bibliography of many such models.

While the generative model introduced by Blei might seem simplistic, it has been shown to be extremely powerful. When a newcomer sees the results of LDA for the first time, they are immediately taken by how intuitive they seem. People sometimes ask me “but didn’t it take forever to sit down and make all the topics?” thinking that some of the magic had to be done by hand. It wasn’t. Topic modeling yields intuitive results, generating what really feels like topics as we know them [3], with virtually no effort on the human side. Perhaps it is the intuitive utility that appeals so much to humanists.

Topic Modeling and Networks

Topic models can interact with networks in multiple ways. While a lot of the recent interest in digital humanities has surrounded using networks to visualize how documents or topics relate to one another, the interfacing of networks and topic modeling initially worked in the other direction. Instead of inferring networks from topic models, many early (and recent) papers attempt to infer topic models from networks.

Topic Models from Networks

The first research I’m aware of in this niche was from McCallum et al. (2005). Their model is itself an extension of an earlier LDA-based model called the Author-Topic Model (Steyvers et al., 2004), which assumes topics are formed based on the mixtures of authors writing a paper. McCallum et al. extended that model for directed messages in their Author-Recipient-Topic (ART) Model. In ART, it is assumed that topics of letters, e-mails or direct messages between people can be inferred from knowledge of both the author and the recipient. Thus, ART takes into account the social structure of a communication network in order to generate topics. In a later paper (McCallum et al., 2007), they extend this model to one that infers the roles of authors within the social network.

Dietz et al. (2007) created a model that looks at citation networks, where documents are generated by topical innovation and topical inheritance via citations. Nallapati et al. (2008) similarly create a model that finds topical similarity in citing and cited documents, with the added ability to predict citations that are not present. Blei himself joined the fray in 2009, creating the Relational Topic Model (RTM) with Jonathan Chang, which can summarize a network of documents, predict links between them, and predict words within them. Wang et al. (2011) created a model that allows for “the joint analysis of text and links between [people] in a time-evolving social network.” Their model is able to handle situations where links exist even when there is no similarity between the associated texts.

Networks from Topic Models

Some models have been made that infer networks from non-networked text. Broniatowski and Magee (2010 & 2011) extended the Author-Topic Model, building a model that would infer social networks from meeting transcripts. They later added temporal information, which allowed them to infer status hierarchies and individual influence within those social networks.

Many times, however, rather than creating new models, researchers create networks out of topic models that have already been run over a set of data. There are a lot of benefits to this approach, as exemplified by the Newton’s Chymistry project highlighted earlier. Using networks, we can see how documents relate to one another, how they relate to topics, how topics are related to each other, and how all of those are related to words.

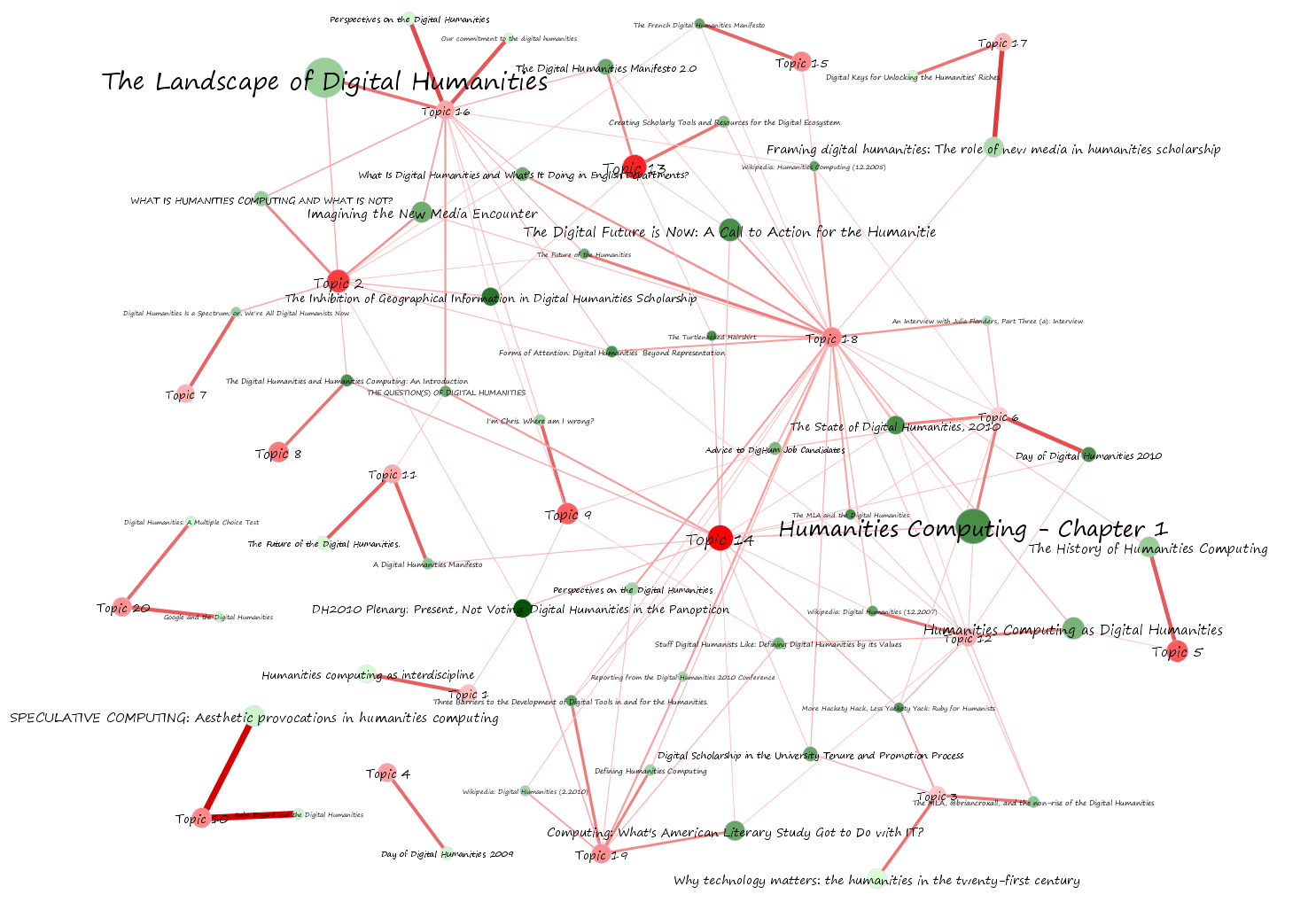

Elijah Meeks created a wonderful example combining topic models with networks in Comprehending the Digital Humanities. Using fifty texts that discuss humanities computing, Elijah created a topic model of those documents and used networks to show how documents, topics, and words interacted with one another within the context of the digital humanities.

Network generated by Elijah Meeks to show how digital humanities documents relate to one another via the topics they share.

Jeff Drouin has also created networks of topic models in Proust, as reported by Elijah.

Peter Leonard recently directed me to TopicNets, a project that combines topic modeling and network analysis in order to create an intuitive and informative navigation interface for documents and topics. This is a great example of an interface that turns topic modeling into a useful scholarly tool, even for those who know little-to-nothing about networks or topic models.

If you want to do something like this yourself, Shawn Graham recently posted a great tutorial on how to create networks using MALLET and Gephi quickly and easily. Prepare your corpus of text, get topics with MALLET, prune the CSV, make a network, visualize it! Easy as pie.

Networks can be a great way to represent topic models. Beyond simple uses of navigation and relatedness as were just displayed, combining the two will put the whole battalion of network analysis tools at the researcher’s disposal. We can use them to find communities of similar documents, pinpoint those documents that were most influential to the rest, or perform any of a number of other workflows designed for network analysis.

As with anything, however, there are a few setbacks. Topic models are rich with data. Every document is related to every other document, if some only barely. Similarly, every topic is related to every other topic. By deciding to represent document similarity over a network, you must make the decision of precisely how similar you want a set of documents to be if they are to be linked. Having a network with every document connected to every other document is scarcely useful, so generally we’ll make our decision such that each document is linked to only a handful of others. This allows for easier visualization and analysis, but it also destroys much of the rich data that went into the topic model to begin with. This information can be more fully preserved using other techniques, such as multidimensional scaling.
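The threshold decision can be illustrated with a small sketch. The document-topic proportions below are hypothetical, and cosine similarity is just one reasonable choice of similarity measure; the point is how quickly a cutoff turns a fully connected network into a sparse one.

```python
import math

# Hypothetical document-topic proportions (each row sums to 1).
doc_topics = {
    "doc_a": [0.70, 0.20, 0.10],
    "doc_b": [0.65, 0.25, 0.10],
    "doc_c": [0.10, 0.10, 0.80],
    "doc_d": [0.05, 0.15, 0.80],
}

def cosine(u, v):
    """Cosine similarity between two topic-proportion vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def build_edges(doc_topics, threshold):
    """Link every pair of documents whose similarity meets the threshold."""
    names = sorted(doc_topics)
    edges = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            similarity = cosine(doc_topics[a], doc_topics[b])
            if similarity >= threshold:
                edges.append((a, b, similarity))
    return edges

all_edges = build_edges(doc_topics, threshold=0.0)   # everything is linked
kept_edges = build_edges(doc_topics, threshold=0.9)  # only close pairs survive
print(len(all_edges), len(kept_edges))  # prints "6 2"
```

With no threshold all six possible pairs are linked; at 0.9 only the two near-identical pairs remain, and the weaker (but nonzero) relationships between the other documents are thrown away.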

A somewhat more theoretical complication makes these network representations useful as a tool for navigation, discovery, and exploration, but not necessarily as evidentiary support. Creating a network of a topic model of a set of documents piles on abstractions. Each of these systems comes with very different assumptions, and it is unclear what complications arise when combining these methods ad hoc.

Getting Started

Although there may be issues with the process, the combination of topic models and networks is sure to yield much fruitful research in the digital humanities. There are some fantastic tutorials out there for getting started with topic modeling in the humanities, such as Shawn Graham’s post on Getting Started with MALLET and Topic Modeling, as well as on combining them with networks, such as this post from the same blog. Shawn is right to point out MALLET, a great tool for starting out, but you can also find the code used for various models on many of the model-makers’ academic websites. One code package that stands out is Chang’s implementation of LDA and related models in R.

Notes:

1. Ted Underwood rightly points out in the comments that other scoring systems are often used in lieu of tf-idf, most frequently log entropy.

2. Yes yes, this is a simplification of actual LSA, but it’s pretty much how it works. SVD reduces the size of the matrix to filter out noise, and then each word row is treated as a vector shooting off in some direction. The vector of each word is compared to every other word, so that every pair of words has a relatedness score between them. Ted Underwood has a great blog post about why humanists should avoid the SVD step.

3. They’re not, of course. We’ll worry about that later.

Excellent, clear post, and I really appreciate the links to the Isaac Newton Chymistry project and TopicNets. Very helpful.

I’m deeply into a variant of LSA at the moment, so I’m disproportionately interested in a couple of details that most people won’t care about. E.g., I’m not sure that most versions of LSA actually use tf-idf scores in the term-doc matrix. I think the more common version may use log-entropy weighting instead of tf-idf weighting.

I actually prefer a different weighting scheme that I haven’t seen used widely, which is basically Observed frequency – Expected frequency. I would also argue that literary scholars are better off skipping the Singular Value Decomposition step, for reasons explained here: http://tedunderwood.wordpress.com/2011/10/16/lsa-is-a-marvellous-tool-but-humanists-may-no-use-it-the-way-computer-scientists-do/

But to stop geeking out about LSA and return to the main point: very helpful post. I haven’t yet tried the generative methods (pLSA and LDA), because I’m so happy with LSA itself, but I know people are excited about them and I intend to compare results systematically at some point this winter.

Thanks! I think you’re right about the tf-idf weighting, I just figured that people just approaching LSA would be more familiar with tf-idf. I’ve added a note referencing your comment, though, because the standard certainly ought to be mentioned.

That’s a great post, I’ve never thought about the issues of SVD for the purposes of the humanities. While SVD in LSA is still useful for most of my historical retrieval needs, you make a very good point about humanists needing to think very carefully about the nitty-gritty details of algorithms that were built with other purposes in mind.

Good luck on your generative model exploration – while pLSA can technically be mathematically equivalent to LDA, it’s a lot more bothersome and misses some of LDA’s functionality, so I’d definitely recommend the latter. LDA and LSA definitely serve two very different purposes; for yours, outlined in the anti-SVD post, LSA is probably more well-suited.

Thanks for the comments… I feel like I’ve come to the DH-Text Analysis-Blog party late in the game, and I’ve been trying to read through yours to catch up!

Great post, Scott! I found the historical section particularly helpful for getting a sense of how topic modeling has evolved.

Thanks! Glad you found it useful.

Great post. I’ve been hoping that someone would explain how the extensibility of LDA makes it quite a different kind of beast (relative to LSA).

The original LDA paper is actually pretty good on this. Another good place is the 2010 Rosen-Zvi, M., Griffiths, T., Steyvers, M., & Smyth, P. expanded write-up of the author-topic model. Once you get a bit beyond LDA it’s clear there’s something being done that can’t be done with LSA. Here’s the citation:

Learning author-topic models from text corpora. M Rosen-Zvi, C Chemudugunta, T Griffiths, P Smyth, M Steyvers, ACM Transactions on Information Systems (TOIS), ACM, 2010. http://www.datalab.uci.edu/papers/AT_tois.pdf